In a world where systems must handle millions of requests in real time, load balancing is the unsung hero. It’s like the referee in a basketball game: working quietly in the background, making sure everyone gets a fair turn and the game runs smoothly. Load balancing distributes incoming requests across multiple servers so that no single resource is overwhelmed, which keeps systems scalable, reliable, and efficient.

But not all load balancing strategies are created equal. Each has its quirks, and picking one is a bit like choosing between LeBron James and Stephen Curry to take the final shot. This post dives into the art and algorithms behind load balancing, exploring the methods that keep systems humming, even under pressure.

What Is Load Balancing, Anyway?

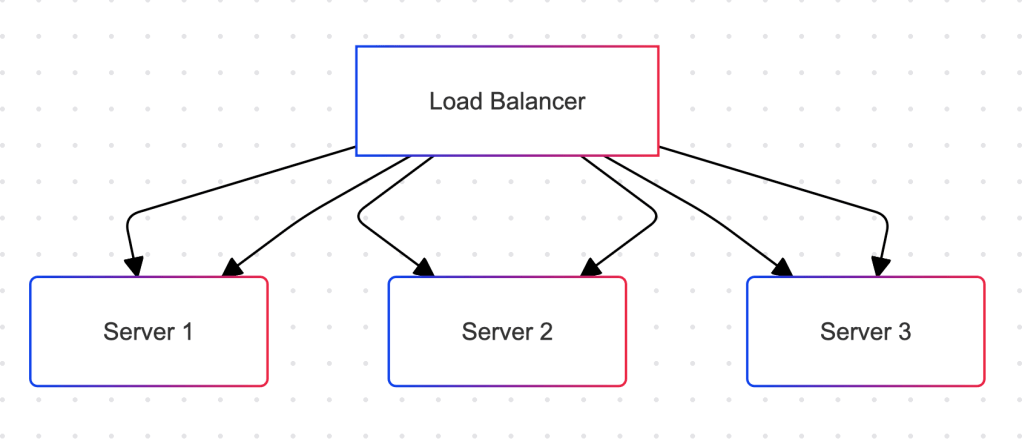

Load balancing is the practice of distributing incoming traffic or tasks across multiple resources, such as servers, databases, or virtual machines, so that no single component is overloaded. By spreading the weight evenly, we improve availability (the system stays up) and scalability (it can grow without breaking). Think of a kitchen preparing many dishes at once: if every task falls on one chef’s shoulders, dinner will be a disaster. But with a little help from sous-chefs, everything stays on track.

Common Load Balancing Algorithms

Now let’s look at the different strategies that load balancers use. Some are straightforward, while others are more nuanced, but each serves a purpose depending on the type of workload or system you’re managing.

1. Round Robin: Everyone Gets a Turn

This is the simplest and most democratic approach. The load balancer sends each request to the next available server in a rotating order—just like passing the basketball around so everyone gets a touch.

When to Use It:

- Best for identical servers with equal capacity.

- Works well when tasks are small and uniform in size.

Drawback:

- It doesn’t consider current load—so if Server 1 is drowning in requests, it’ll still get more just because it’s “next in line.”
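To make the rotation concrete, here is a minimal Python sketch. The class name `RoundRobinBalancer` and the server names are hypothetical; the sketch simply cycles through a fixed list and, like the algorithm itself, ignores how busy each server is.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Minimal round-robin sketch: hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = cycle(servers)  # endless iterator over the server list

    def pick(self):
        # Current load is ignored entirely; the next server in line gets the request.
        return next(self._rotation)


lb = RoundRobinBalancer(["server-1", "server-2", "server-3"])
print([lb.pick() for _ in range(5)])
# ['server-1', 'server-2', 'server-3', 'server-1', 'server-2']
```

Notice that `pick` never looks at anything except the rotation, which is exactly why a struggling server keeps receiving its share.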

2. Least Connections: Give It to the Fresh Legs

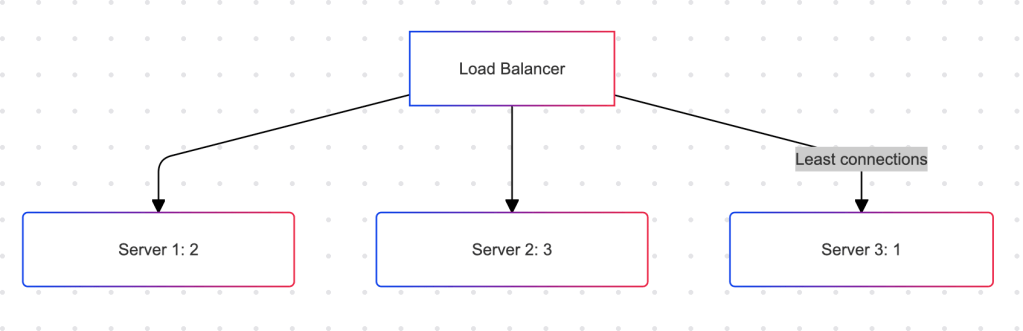

With this algorithm, the load balancer sends traffic to the server with the fewest active connections. It’s like spotting the teammate who isn’t winded and passing them the ball—they’re ready to take the next shot.

When to Use It:

- Ideal for dynamic workloads where some tasks take longer than others.

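- Can also help when servers vary in capacity, since faster servers finish requests sooner, free up connections, and so receive more traffic.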

Drawback:

- The load balancer must track active connections for every server, which adds some overhead compared with Round Robin.
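A minimal sketch of Least Connections, assuming the balancer counts the connections it opens itself and is told when each one closes. `LeastConnectionsBalancer`, `acquire`, and `release` are hypothetical names chosen for illustration.

```python
class LeastConnectionsBalancer:
    """Minimal least-connections sketch: tracks open connections per server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}  # in-flight connection counts

    def acquire(self):
        # Choose the server with the fewest in-flight connections
        # (min() returns the first one listed when there is a tie).
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request finishes so the counts reflect current load.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["server-1", "server-2", "server-3"])
for _ in range(3):
    lb.acquire()            # one connection each: server-1, server-2, server-3
lb.release("server-2")      # server-2 finishes its short task first
print(lb.acquire())         # server-2, which now has the fewest active connections
```

The `active` dictionary is the bookkeeping cost mentioned above: every request touches it twice, once when it starts and once when it ends.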

3. IP Hash: Send Them Back to Where They Belong

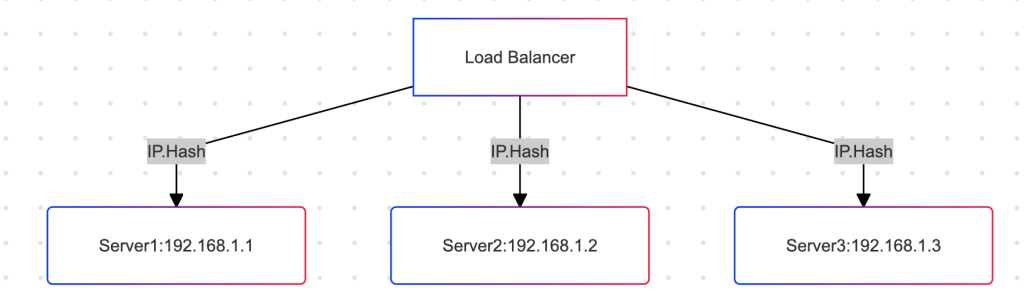

This algorithm hashes the client’s IP address to decide which server handles the request. It’s like assigning each fan a specific seat at a game: you always go back to the same place.

When to Use It:

- Useful when you need session persistence, like in online shopping carts.

- Ensures that requests from the same client always go to the same server.

Drawback:

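- If a server goes down, its clients are sent elsewhere and may lose their sessions. With a simple hash-modulo scheme, changing the number of servers can also remap many clients whose servers never failed.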
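The sketch below assumes a simple scheme: hash the IP with SHA-256 and take the result modulo the number of servers (real load balancers may hash differently). Python’s built-in `hash()` is salted per process for strings, so `hashlib` is used to keep the mapping stable across restarts. The sketch also shows why losing a server is disruptive: removing one changes the modulus, so even clients of healthy servers can be remapped. `pick_server` and the addresses (from reserved documentation ranges) are illustrative.

```python
import hashlib


def pick_server(client_ip: str, servers: list[str]) -> str:
    # Stable digest of the IP (the built-in hash() of a str is salted per process).
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Simple modulo mapping from hash value to a server index.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-1", "server-2", "server-3"]

# The same client always lands on the same server.
print(pick_server("203.0.113.7", servers) == pick_server("203.0.113.7", servers))  # True

# Drop server-3: len(servers) changes, so clients that were on server-1 or
# server-2 can be remapped too, not only those that were on server-3.
clients = [f"198.51.100.{i}" for i in range(1, 101)]
before = {ip: pick_server(ip, servers) for ip in clients}
after = {ip: pick_server(ip, servers[:2]) for ip in clients}
moved = sum(before[ip] != after[ip] for ip in clients)
print(f"{moved} of {len(clients)} clients moved")
```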

4. Weighted Round Robin: Not All Players Are Equal

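In this strategy, each server is assigned a weight that reflects its capacity, and the load balancer sends it a proportional share of requests: a server with weight 3 gets three requests for every one sent to a server with weight 1. It’s like a basketball team where some players (servers) get more time on the court because they’re better at handling pressure.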

When to Use It:

- Best when servers have different capabilities or processing power.

Drawback:

- Needs careful configuration—if weights aren’t set correctly, it can cause imbalances.
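One way to implement the weights is the “smooth” variant of weighted round robin sketched below, which interleaves picks instead of sending each server its whole share in one burst. This is an illustrative choice, not the only approach; `WeightedRoundRobinBalancer` and the weights are hypothetical.

```python
class WeightedRoundRobinBalancer:
    """Smooth weighted round robin: picks each server in proportion to its
    weight, interleaving picks rather than bunching them together."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {server: 0 for server in self.weights}  # running scores

    def pick(self):
        # Every server earns its weight; the highest score wins and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)  # ties go to the first listed
        self.current[chosen] -= self.total
        return chosen


lb = WeightedRoundRobinBalancer({"server-1": 5, "server-2": 1, "server-3": 1})
print([lb.pick() for _ in range(7)])
# ['server-1', 'server-1', 'server-2', 'server-1', 'server-3', 'server-1', 'server-1']
```

After every 7 picks the scores return to zero, so server-1 receives exactly 5 of every 7 requests. A mistaken weight skews the split by the same rule, which is why the configuration matters.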

5. Random: Sometimes, You Just Wing It

This method distributes requests randomly to any available server. Think of it as drawing names out of a hat—sometimes you get lucky, sometimes not.

When to Use It:

- Useful when traffic patterns are unpredictable.

- Works well with identical servers.

Drawback:

- Randomness can occasionally create uneven distribution, leading to overloaded servers.
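A quick simulation illustrates this drawback: with only a handful of requests the split can be lopsided, while over thousands of requests it tends to even out. `pick_random` is a hypothetical helper, and the fixed seed is there only to make the run repeatable.

```python
import random
from collections import Counter


def pick_random(servers, rng=random):
    # Each request goes to a uniformly random server; no state is kept.
    return rng.choice(servers)


servers = ["server-1", "server-2", "server-3"]
rng = random.Random(42)  # seeded only so the demo is repeatable

for n in (12, 12_000):
    counts = Counter(pick_random(servers, rng) for _ in range(n))
    print(n, dict(counts))
```

Because `pick_random` keeps no state, it is about as cheap as a strategy can be; the price is that short bursts of traffic can pile onto one server.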

When Load Balancing Goes Wrong

Even the best load balancers can run into trouble. A misconfigured algorithm can lead to server overload, delays, or even downtime. That’s why monitoring tools are crucial—they provide insights into performance and help detect issues before they become critical.

The Perfect Load Balancer Doesn’t Exist

Choosing the right load balancing strategy isn’t a one-size-fits-all situation. It’s about understanding your system’s needs and applying the best algorithm for the job. Some systems benefit from the simplicity of Round Robin, while others need the precision of Least Connections or Weighted Round Robin. The key is flexibility—just as a good basketball team knows when to switch strategies mid-game.

The art of load balancing lies in spreading the weight evenly to avoid bottlenecks and downtime. By choosing the right algorithm for your workload, you ensure your system stays reliable, scalable, and responsive—even when the pressure is on.

Just like a well-coached team that knows how to distribute the ball, a well-balanced system ensures every request is handled smoothly, with no one part of the system taking on more than it can handle. So, the next time you’re managing a high-traffic app or a complex data pipeline, remember: keep the load light, spread the weight, and let every server get a touch.
